#403 - Daniel Langkilde - CEO, Kognic

“Even if we can train a self-supervised, end-to-end self-driving agent, it does not mean it’s going to be a good driver - it might just be able to imitate in some cases. But as soon as the experience it has deviates too much from what it has seen, it is unclear what would happen - that is really the hard problem that we underestimate all the time.”

Autonomous driving is a big enough paradigm shift on its own. But after years of research and billions of dollars spent trying to get cars to drive themselves, perhaps it is time for a paradigm shift within the paradigm shift. What might that look like?

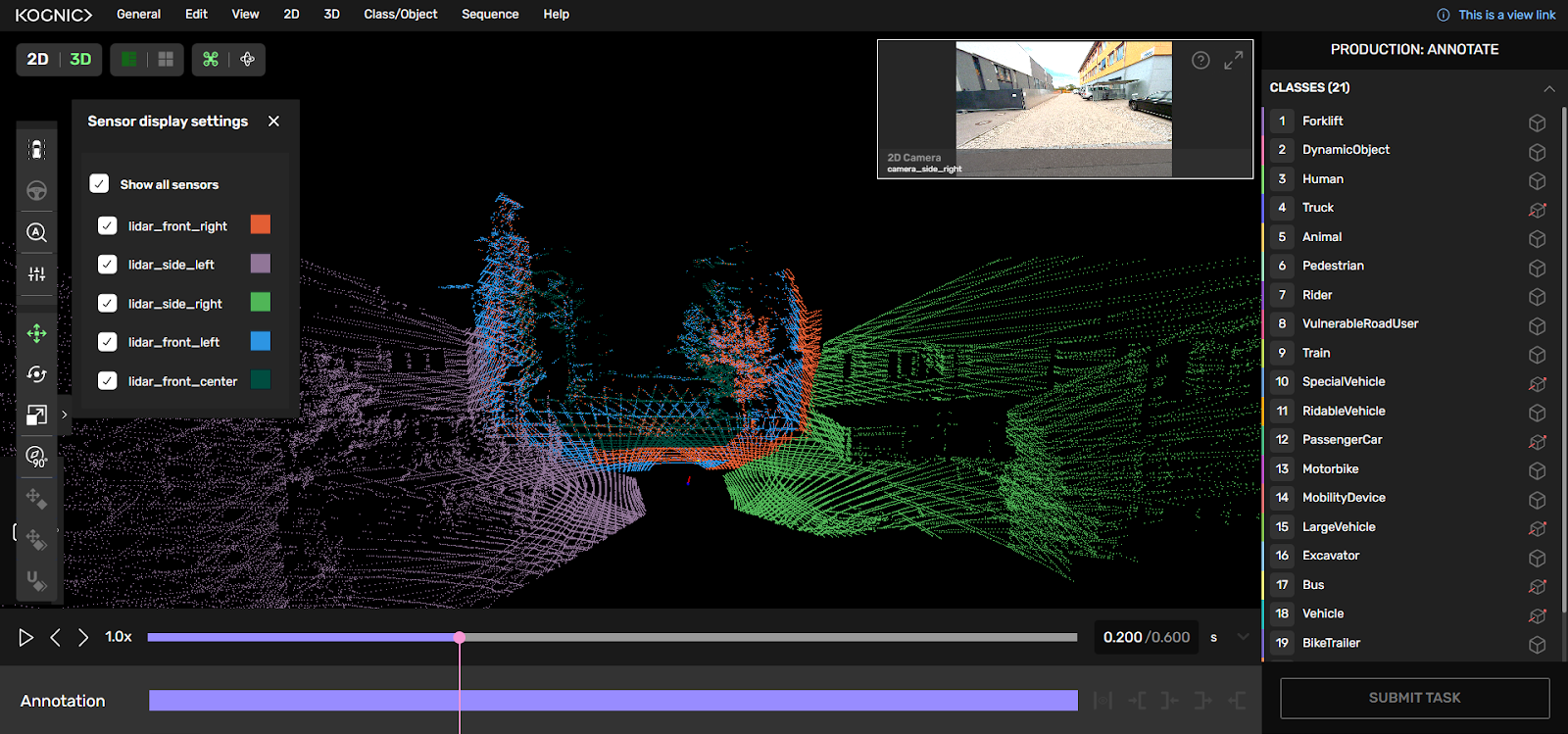

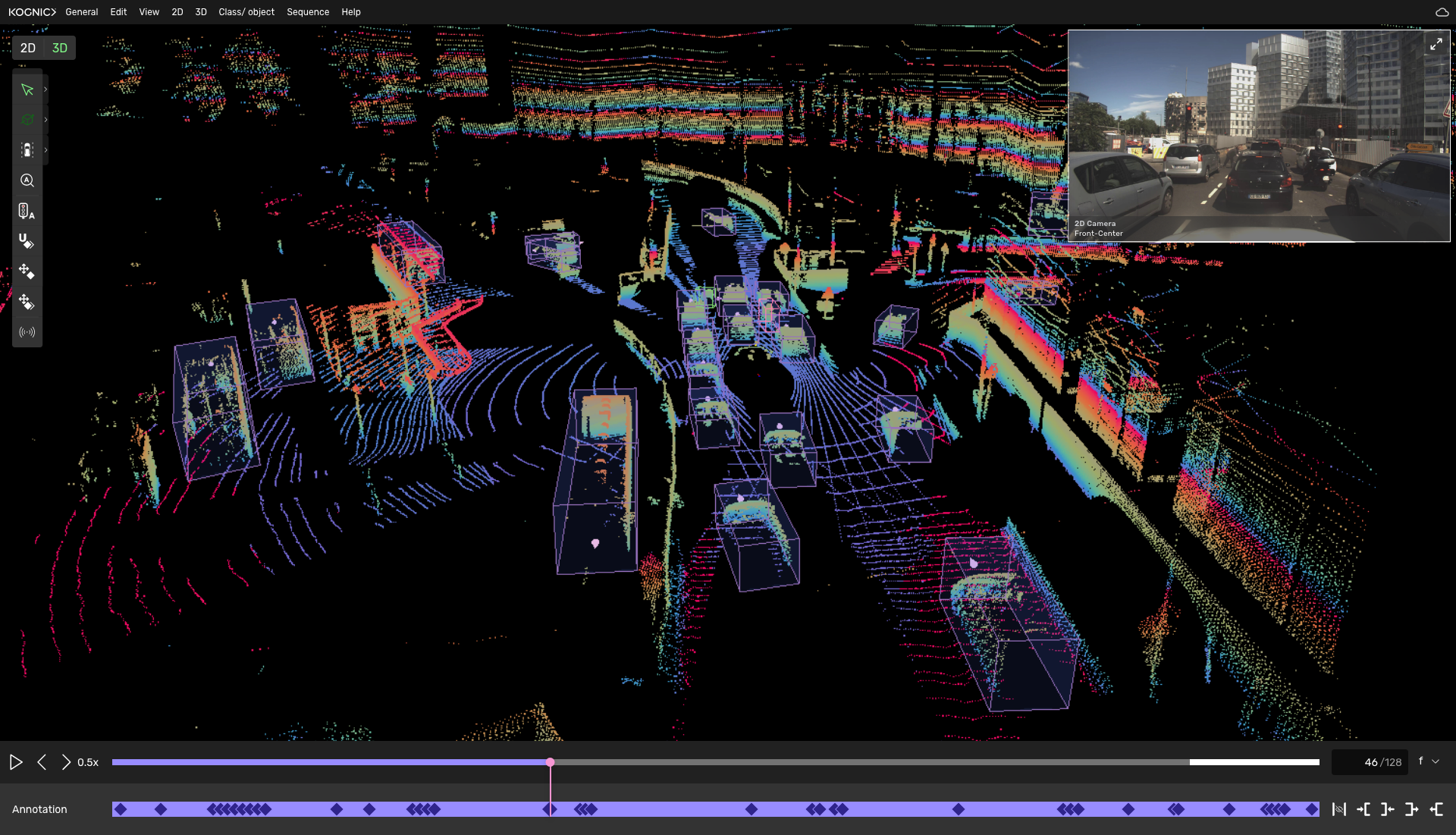

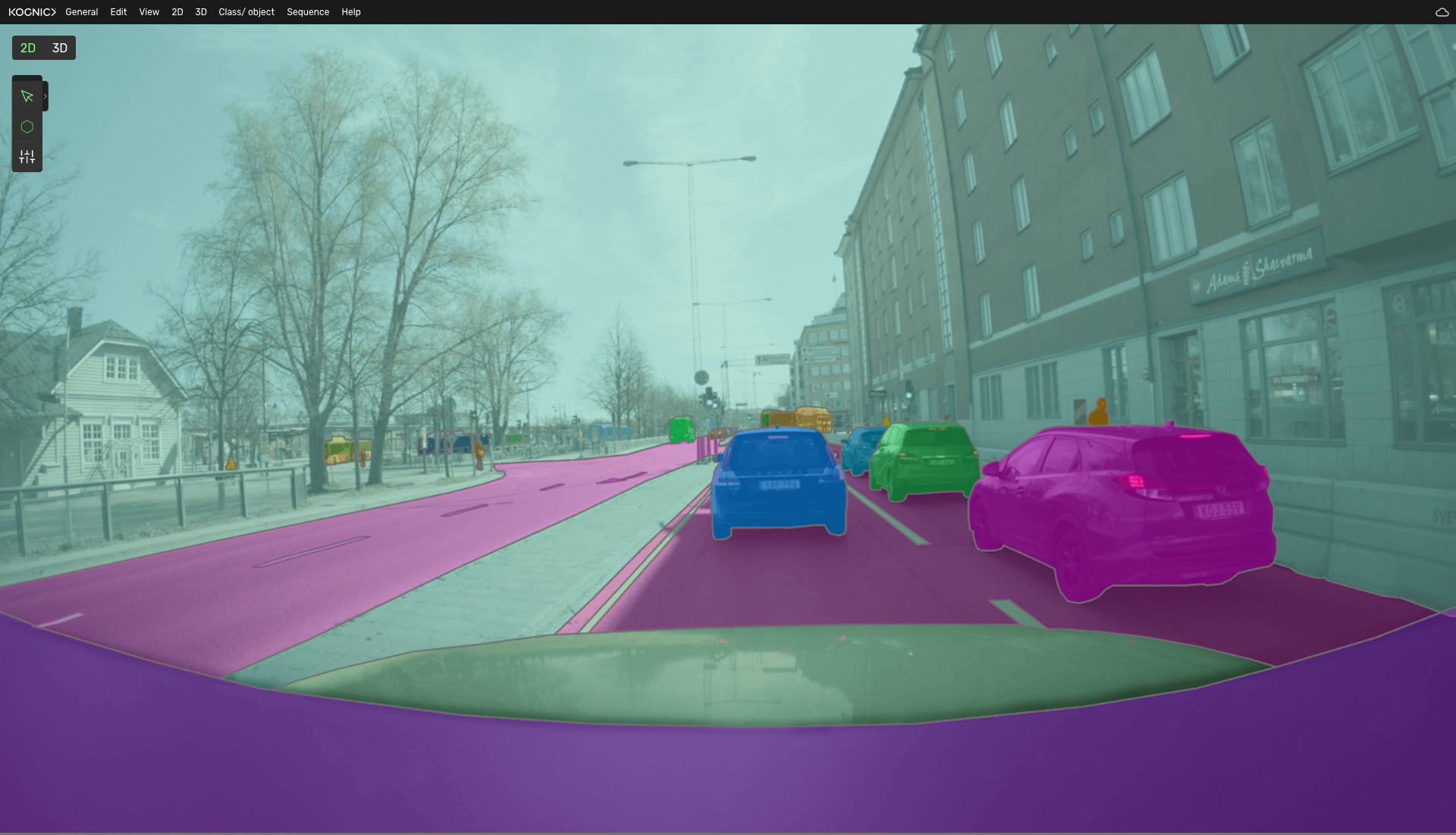

Daniel Langkilde, CEO of Kognic, joins me on the AI in Automotive Podcast to discuss exactly this. Daniel and I talk about the current approach to autonomy, which breaks a very complex problem down into its components (perception, prediction and planning), and about the limitations of that approach. Drawing on a better understanding of how humans actually drive, we ask whether it is time to take a different approach to delivering autonomy at scale. We discuss a key component of this approach: the world model, or the ‘common sense’ a machine must be equipped with to make sense of the complex world around it. Daniel also talks about alignment, meaning what it takes to steer a system towards accomplishing its stated goal, and why it matters for autonomous driving.

I am convinced that we are far from done with solving autonomy. In fact, I feel there is still a lot of unexplored territory, which could dramatically change how we approach this opportunity. I hope my chat with Daniel gave you a sneak peek at how paradigm shifts begin, and what they could mean for the future of autonomous driving. If you enjoyed this episode of the AI in Automotive Podcast, please share it with a friend or colleague, and rate it wherever you get your podcasts.

#ai #automotive #mobility #technology #podcast #autonomousdriving #alignment #worldmodel